BINARY TO BCD CONVERSION

(Last Modified: 04 November 2010 06:08:16 PM )

BCD is Binary Coded Decimal and is simply a means of representing the digits of a number individually and storing the results for each digit separately as a binary value. As a result, each digit requires 4 bits and only ten of the values, 0-9, are used. For instance, the largest value that can be represented by a twelve bit straight binary value encoding, namely 8191 (0x0FFF) would require 16-bits (four decimal digits) and be represented as:

| 8 | 1 | 9 | 1 | ||||||||||||

| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |

At one time, BCD representation was very widespread since it lends itself well to the displaying values using resource-limited hardware. However, as the capabilities of hardware has improved and the cost come down, the use of BCD has largely withered in favor of using the computationally-friendly two's complement integer and IEEE floating point representations.

None-the-less, values still have to be manipulated in such a way as to permit displaying them in the human-friendly decimal (base-10) representation. There are many ways to do this, ranging from extremely brute force to exceptionally elegant. For most users the implementation details are hidden behind library calls. But someone has to write these libraries and designers working at the hardware level may not have libraries available to them and therefore have to build there own.

Converting from a straight binary value to a BCD representation is one of the fundamental building blocks for such a library. Most algorithms for doing this are iterative in nature and involve "moving" the value from one representation to the other in a manner appropriate to each representation. In other words, we start with the value V in the representation we are converting from and 0 in the representation we are converting to. We then subtract X from V and add X to 0 choosing X in such a way that performing the operations are simple in both representations. We repeat this process systematically, each time subtracting the new value of X from the residual value in the old representation and adding it to the partial value in the new representation and choosing values for X that eventually result in the residual value stored in the first representation reaching 0, at which point we have the value V in the new representation. Therefore, to understand how any of these algorithms work we must have an adequate understanding of how to do the necessary arithmetic in both representations. We will assume that we either know how to perform such operations in straight binary (or can rely on the processor's intrinsic capabilities) but that we must implement the necessary BCD operations using appropriate binary operations. For our purposes here, the only operations we need are adding two BCD values together and multiplying a BCD value by two. However, first we will examine a brute force approach along with some simples improvements to that approach.

Let's say that we have an unsigned binary integer value that is, at most, 16 bits stored in the variable X. This means that we need to be able to end up with a BCD representation having, at most, five digits since the value must be less than 65,536. Let's store our BCD digits in an array such that the ones digit is at bcd[0] and the ten-thousands digit is at bcd[4]. Our strategy for performing the conversion is simple:

Examination of the above algorithm shows that it lends itself well to a fairly tight loop. Also, notice that the last step can be replaced with a straight assignment of the remaining value in X to bcd[0]. The code to do this in C might look like the following:

i = DIGITS-1;

v = VMAX;

while (i > 0)

{

bcd[i] = 0;

while (x >= v)

{

x = x - v;

bcd[i] = bcd[i] + 1;

}

v = v / 10;

i = i - 1;

}

bcd[0] = x;

The parameter "DIGITS" is a constant defined at the top of the source code file and is set equal to the maximum number of digits in the BCD results. The value VMAX is also a constant that is set equal to 10^(DIGITS-1). These two parameters make the code trivial to modify if a different number of digits is desired. For the conversions discussed here, DIGITS is equal to 5 and VMAX is equal to 10,000.

The use of for() loops and shorthand statements has been avoided so that people not familiar with C syntax are still likely to be able to follow the code and translate it into their language of choice. Leveraging the syntax features of C, this can be compacted to:

for (bcd[i=DIGITS-1]=0, v=VMAX; i; bcd[--i]=0, v/=10)

{

while (x >= v)

{

x -= v;

bcd[i]++;

}

}

bcd[0] = x;

So how efficient is this algorithm? Not very, since it is most likely being used to format data for output in human readable format, it is probably fast enough for most live applications, such as writing to a display device. Notice that the outer loop runs exactly one time less than the maximum possible number of digits in the BCD result, even if the value being converted is 0. For each BCD digit, the inner loop runs a number of times equal to the eventual value of that digit. So the values of x that result in the greatest number of operations are 59,990 through 59,999. Each will result in the inner loop being invoked 23 times. Now, not all operations take the same amount of time to execute (at least on most machines). The most expensive operation, for most platforms, is the division operation. Fortunately, it is only performed once for each iteration of the outer loop, so four times max. However, on a particular processor a single division operation may require more time to execute than all of the other operations combined. Plus, many platforms that would use an algorithm like this, such as embedded microcontrollers or programmable logic devices, do not support division at all, at least not natively. They may or may not support multiplication.

Let's look as some possible ways to improve this. If we really want to avoid multiplication and division, we can create a look-up table with all of the possible values of v that we might need. As an aside, we could also construct this table dynamically since we only have to do it once; doing so would actually make it trivial to convert to BCD representations of x in other, smaller, number bases, which could be useful for some applications. Next, we don't have to subtract off values from x equal to the weight of the BCD digit we are presently working on. Instead, we can first grab a large chunk and then grab progressively smaller chunks. For example, when working on the thousands digit, we can see if we can pull over 8000, then 4000, then 2000, and finally 1000.

w[0] = 1;

w[1] = 10;

w[2] = 100;

w[3] = 1000;

w[4] = 10000;

...

i = DIGITS-1;

while (i > 0)

{

bcd[i] = 0;

k = 3;

v = w[i]<<k; // This performs v = (2^k)*w[i] efficiently

while (k >= 0)

{

if (x >= v)

{

x = x - v;

bcd[i] = bcd[i] + (1<<k); // 1<<k equals 2^k

}

k = m - 1;

v = v >> 1; // This performs v = v/2 efficiently

}

i = i - 1;

}

bcd[0] = x;

Notice that w[0] never gets used since we are using the knowledge that the least significant digit can be set equal to the residual value of x in order to eliminate the final pass through the loop. The code could be tweaked to eliminate it and save the storage space.

A special note should be taken of the line

bcd[i] = bcd[i] + (1<<k); // 1<<k equals 2^k

It would be very easy to think that this should be the same as

bcd[i] = bcd[i] + 1<<k; // 1<<k equals 2^k

However, in C, addition and subtraction have higher precedence than the bit shift operators. This is true in most, if not all, languages that have such operators. The reason is that, when mixed with arithmetic operators, they are primarily used for shifting the final results of arithmetic operations. This underscores the importance of not letting the compiler do too much of our thinking for us: make liberal use of parentheses to ensure that the compiler has no choice but to evaluate expressions as we intended.

Once again we will compact this code using the syntax features of C to get:

w[0] = 1;

w[1] = 10;

w[2] = 100;

w[3] = 1000;

w[4] = 10000;

...

for (i =DIGITS-1; i; i--)

for (bcd[i]=0, v=w[i]<<(k=3); k>=0; k--,v>>=1)

if (x >= v)

{

x -= v;

bcd[i] += 1<<k;

}

bcd[0] = x;

It should be noted that the above code was compacted to a much greater degree than is probably wise since, most people will agree, it has lost a great deal of readability and, hence, maintainability. On the other hand, the loop initialization logic for the inner loop is now tightly bound to the loop itself, which has maintainability benefits.

Finally, when x is small we can bypass working with the larger values of v by determining what value of v we should start with for this particular conversion. However, we must initialize the higher digits of the BCD result to zero or otherwise deal with this issue. The following code is one way to do this.

w[0] = 1;

w[1] = 10;

w[2] = 100;

w[3] = 1000;

w[4] = 10000;

...

for (imax = 0; x>=w[imax]; imax++)

; // This makes the loop empty

for (i = DIGITS-1; i; i--)

{

bcd[i] = 0;

if(i<imax)

for (v=w[i]<<(k=3); k>=0; k--,v>>=1)

if (x >= v)

{

x -= v;

bcd[i] += 1<<k;

}

}

bcd[0] = x;

So how much of a performance gain is achieved by applying these enhancements? Tests using the five variations above were performed where the code was asked to convert each value from 0 through 99,999 and this was performed one hundred time -- hence the function was called ten million times. The value of the process clock was captured before and after the loop was started. The results were as follows:

| Variant | Clock Ticks |

| 1 | 3535 |

| 2 | 3765 |

| 3 | 3155 |

| 4 | 3145 |

| 5 | 3895 |

The fact that Variant #2 was noticeably slower than Variant #1 is a bit puzzling. It is true that Variant #2 performs one additional assignment operation (can you spot it?), but that shouldn't begin to account for the difference. A clear performance gain -- about 10% -- is realized by eliminating the division operation in Variants #3 and #4.

However, notice that a significant penalty was paid when we attempted to bypass the higher valued loops. Why? Certainly there is some overhead penalty associated with running the first loop, even though it is empty, in order to determine the most significant digit. The hope is that doing so will result in a greater savings by bypassing the inner loop associated with the digits we've thus determined must be zero. For values restricted to only five digits, it's possible the savings simply aren't enough to outweigh the penalty.

After modifying the code to handle up to eight BCD digits (DIGITS = 8 and VMAX = 10,000,000), the results were as follows:

| Variant | Clock Ticks |

| 1 | 5247 |

| 2 | 5669 |

| 3 | 4987 |

| 4 | 6289 |

| 5 | 5298 |

Notice that the loop trimming done by Variant #5 is beginning to produce results. However, now Variant #4 is unexpectedly slow.

Out of curiosity, a test was performed on Variant #2 wherein the outer for() loop's bookkeeping was simplified and the associated code added to the loop body itself. The result was that the loop ran faster than Variant #1, but a small margin, even if the additional assignment was added manually. This implies that the cost associated with Variant #2 wasn't the additional assignment, but rather that the for() setup had gotten too involved for the optimizing compiler to deal with effectively. The code for the new version of Variant #2 looks like this:

for (i=DIGITS-1, v=VMAX; i; i--, v/=10)

{

bcd[i] = 0;

while (x >= v)

{

x -= v;

bcd[i]++;

}

}

bcd[i] = 0; // Extraneous assignment

bcd[0] = x;

Turning attention to Variant #4's poor performance, it was first modified both to simplify the for() loop's setup resulting and to eliminate the need to compute (1<<k) each time. This was done by redefining k from being the exponent of the digit's value to being the value directly. Hence where k used to take on the values {3,2,1,0} it now simply takes on the values {8,4,2,1}.

for (i = DIGITS-1; i; i--)

{

bcd[i] = 0;

v = w[i]<<3;

for (k=8; k>0; k>>=1,v>>=1)

{

if (x >= v)

{

x -= v;

bcd[i] += k;

}

}

}

bcd[0] = x;

This did result in some improvement, however not much. A more radical approach was to unfold the inner loop entirely.

for (i = DIGITS-1; i; i--)

{

bcd[i] = 0;

v = w[i]<<3;

if (x >= v)

{

x -= v;

bcd[i] += 8;

}

v>>=1;

if (x >= v)

{

x -= v;

bcd[i] += 4;

}

v>>=1;

if (x >= v)

{

x -= v;

bcd[i] += 2;

}

v>>=1;

if (x >= v)

{

x -= v;

bcd[i] += 1;

}

}

bcd[0] = x;

This cut the time nearly in half, emphasizing the value of eliminating inner loops and writing the code in-line, where possible, if speed is critical.

The final performance measurements are shown below.

| Variant | Clock Ticks |

| 1 | 5207 |

| 2 | 5188 |

| 3 | 4867 |

| 4 | 6129 |

| 5 | 5198 |

| 6 | 5979 |

| 7 | 3044 |

The next method of converting from binary to BCD relies on an understanding of the mechanics of adding and doubling BCD values, therefore this section is intended to help establish that understanding.

Adding two BCD values can be done exactly the same way that most people learned to add two decimal integers in grade school, namely start from the right side and add the two right-most digits. If the value is greater than 9, a carry is generated. A 'carry' involves nothing more than subtracting ten from the result of the addition and adding one to the next column to the left.

Multiplying two arbitrary integers together, either in straight binary or in BCD, is not trivial. However, if we restrict ourselves to only multiplying an arbitrary integer by 2, it becomes very simple. Indeed, in straight binary is simply involves shifting the entire number one bit to the left and setting the right-most bit to zero. In BCD it is a little more complicated, but not much. The necessary steps can be gleaned by considering a BCD value whose digits are ABCD. Recall that each digit is represented by a 4-bit straight binary encoding that can have the values between 0 and 9, inclusive. Performing the multiplication in the basic fashion we simply multiply each digit by 2 and, if the result is greater than nine we subtract ten from the result for that digit and add one to the next digit to the left.

| X= | A | B | C | D |

| 2X= | 2A | 2B | 2C | 2D |

| if (2C>9) | 2A | 2B+1 | 2C-10 | 2D |

On a digit-by-digit basis we can perform the multiplication-by-two by simply right shifting the bits that represent the digit by one bit. This is possible since, within each digit, the representation is straight binary. However, the result could conceivably be a 5-bit value and we only have four bits to work with. Let's focus on a single BCD digit and see what the possibilities are:

Y = 2X+Cin

where X is the BCD value in a particular digit location and Cin is the value of the carry from the location to its right (hence, Cin is either 0 or 1).

If 2X is less than 10, then no carry occurs out of this position to the position to the right, regardless of the value of Cin. Conversely, if 2X is greater than or equal to 10 a carry does occur, again regardless of the value of Cin. The reason that Cin doesn't have a role in determining whether a carry occurs is that for this to happen the value would have had to be a 9 without the carry and a 10 with the carry. But the value without the carry, since we are multiplying by two, is even. Thus, if a carry is going to happen from a given column it will happen without regard to the column that precedes it.

If 2X is greater than 10, then a carry does occur and we need to subtract ten from the result. Thus we have

IF (2X < 10): Y = 2X + Cin; Cout = 0

ELSE: Y = (2X-10) + Cin; Cout = 1

Now, while 2X might be a 5-bit number, we know that it won't be in the IF portion of the above algorithm. In the ELSE portion of the algorithm we know that the final result, namely (2X-10)+Cin, will be, at most, a 4-bit number since it will represent a valid decimal digit. Thus we don't need to worry about storing the fifth bit if we can devise a way of computing (2X-10)+Cin without it since it won't survive to the final result. This is were a bit of trickery can pay huge dividends.

First, notice that we can perform the multiply-by-two operation on all of the digits at once by packing them into a sufficiently wide register and shifting them all to the left one bit. Now notice that, if the Cout bit from each BCD position is placed in the most significant bit that it is automatically added to the result for the bit to its left when the shift occurs. You might think that we are trying to store two bits of information in the same bit location if we attempt this, but the key is to recognize that we don't have to perform the multiplication before checking to see if a carry will occur and making the necessary adjustments since the test (2X<10) is the same as the test (X<5). With this, we can rewrite our algorithm as:

IF (X < 5): Y = 2X + Cin; Cout = 0

ELSE: Y = 2(X-5) + Cin; Cout = 1

It may not look like we have done anything, but we have set the stage for the trick. First notice that, in the IF clause, the largest value that X can be is 4, hence the most significant bit is zero and we can place the Cout bit in this location. This is trivial since, in this case, Cout is also zero. Now consider the ELSE clause; the largest value that (X-5) can have is also 4, meaning again that the most significant bit will be zero and that we can again place the Cout bit in this location. Since, in this case, the Cout bit is 1, we do this by adding 8 to (X-5) leaving us with (X+3). We are then left with:

IF (X < 5): Y = 2X + Cin

ELSE: Y = 2(X+3) + Cin

Our final algorithm is therefore very simple: In order to double a BCD value, first examine each BCD location and, if the value in that location is greater than 4, add 3 to it. Then shift the entire bit string one bit to the left, which both performs the multiply-by-two and the addition of the Cin bit. Since the largest value we can obtain as a result of adding 3 is 9+3=12, we can perform the operation on each 4-bit BCD position independently and simultaneously since there will not be any interaction between positions.

The logic necessary for the conditional addition of three to each BCD position can be implemented in a 4-bit wide, 4-bit deep look-up table as follows:

| X3 | X2 | X1 | X0 | Y3 | Y2 | Y1 | Y0 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 |

| 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 |

| 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 |

| 0 | 1 | 0 | 1 | 1 | 0 | 0 | 0 |

| 0 | 1 | 1 | 0 | 1 | 0 | 0 | 1 |

| 0 | 1 | 1 | 1 | 1 | 0 | 1 | 0 |

| 1 | 0 | 0 | 0 | 1 | 0 | 1 | 1 |

| 1 | 0 | 0 | 1 | 1 | 1 | 0 | 0 |

| 1 | 0 | 1 | 0 | X | X | X | X |

| 1 | 0 | 1 | 1 | X | X | X | X |

| 1 | 1 | 0 | 0 | X | X | X | X |

| 1 | 1 | 0 | 1 | X | X | X | X |

| 1 | 1 | 1 | 0 | X | X | X | X |

| 1 | 1 | 1 | 1 | X | X | X | X |

Since we are starting with a valid BCD representation, we only need to worry about entries in the table corresponding to values of X less than 10.

Let's examine our algorithm for doubling a BCD number in a more generic setting, namely what would we have to do to double a BCB number where BCB means Binary-Coded Base-B number? For instance, let's say that we wanted to express numbers in Binary-Coded Base-26 so that we could map the results directly to the English alphabet. What changes would need to be made to our algorithm?

Following the same development as before we have the following:

Notice that the algorithm still ends up adding 16 - 13 = 3 if we need to adjust the value in a digit that will result in a carry when doubled. Does this mean that the 3 is somehow always the value that needs to be added? No, this is just coincidental. What if we wanted to use base-60? Our development would then become.

So our magic number in this case is 32 - 30 = 2. Now let's generalize this algorithm to any base-B.

So our magic number for the generic case is

m = 2(k-1) - (B/2)

This can be re-written as

m = (2k - B)/2

Notice that this has a very simple and easy to remember interpretation. If a digit, before doubling, if at least half of the number base then adjust it by adding half of the difference between the binary base associated with that digit and the actual base being used. For our last example, a base-60 digit needs 6 bits which, in binary, would correspond to a base-64 digit. Half of the difference between these two bases is 2, which if the value that must be added if the initial value of the digit is 30 or more.

Since m has to be an integer, the numerator in the last expression above must be even. Since 2k is even for integer k, that means that B must be even. The conclusion is that this algorithm only works, at least in this simple form, for even number bases.

The value of a number in a normal positional numbering system, whether it be decimal or binary or BCD, can be expressed as:

X = D0 + B(D1 + B(D2 + B(D3 + ... + B(Dn-2 + B(Dn-1)) ... )))

Where B is the number base, D0 is the least significant digit, and Dn-1 is the most significant.

We can thus build up a value in one base from the digits in another base by simply carrying out the operations indicated above. We initialize our converted result to zero and then, starting with the most significant digit of the number we are converting from, add each digit to the partial result and multiply by the number base we are converting from while performing all of the operations in the number base we are converting two. We stop as soon as we have added the least significant digit without performing another multiplication by the old number base. For more details on this method, as well as others, see Number Base Conversions.

To convert from binary to BCD we can use the following simple algorithm:

We already know how to multiply a BCD value by two very efficiently and also know that we can add in a value of 0 or 1 to the least significant digit at the same time. In fact, simply shifting the contents of the binary value into the BCD value performs the multiplication and carry operations. All we must do is examine each BCD digit before each shift operation and add three to those values that are greater than four. With this in mind, our algorithm becomes:

While the above algorithm can certainly be implemented in a program running on a traditional processor, it turns out that it is extremely inefficient. The reason is that the operations involved are ones that most processors are not well equipped to handle efficiently because they involve tearing down into the bits that make up the values and manipulating them directly. This process, known as "bit banging", is very powerful but frequently very slow because processors are optimized to work on entire multi-byte values.

However, the same is not true of other platforms that this algorithm might be targeted for. In particular, programmable logic devices, such as FPGA's (Field Programmable Gate Arrays) are optimized for bit level manipulation and, more importantly, are designed for concurrency. For instance, if we have a six-digit BCD value, a normal program would have to perform the same operation one each bit but it must do so one bit at a time, i.e., sequentially. This is true even though we know that the operations on each digit are independent of the operations on any other digit. However, because we can configure an FPGA to place multiple copies of the necessary logic blocks into the design, we can perform all of the operations at the same time, i.e., concurrently. Furthermore, we can configure the logic to immediately pass the output of one step to the input of the next step without requiring a synchronizing event (i.e., the clock). This is possible because the computations can be done combinatorially. The result is that we can perform a complete conversion in one clock cycle, provided that period of the clock is long enough to permit the signals to propagate throughout the logic. If they aren't, then we can use registers and techniques such as iteration or pipelining to break the logic operations into pieces that are, individually, fast enough to be performed in the necessary amount of time.

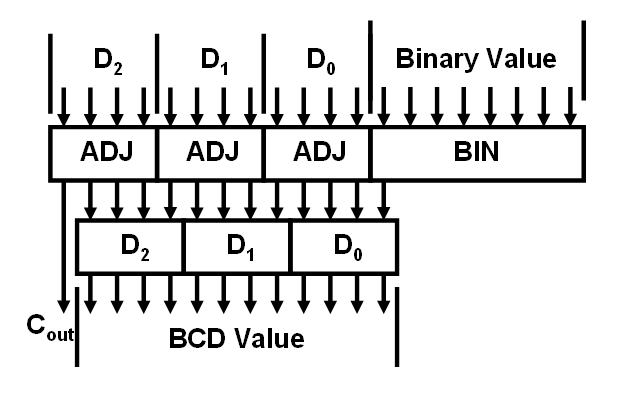

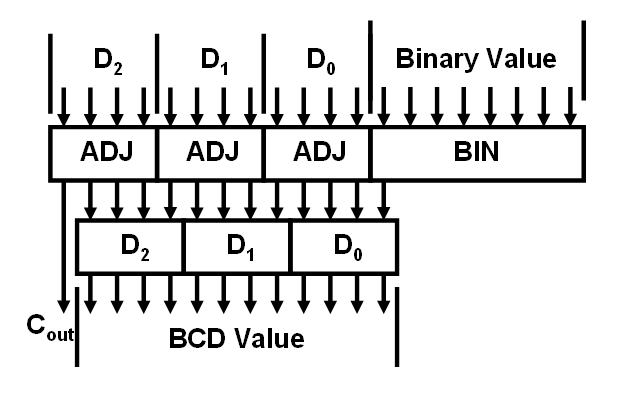

Perhaps the simplest architecture to implement this algorithm is shown below for the case of an 8-bit binary to 3-digit BCD converter. Note that the BIN block is a typical shift register in which the binary value to be converted is loaded into the register on the first clock and the contents are shifted to the left on each subsequent clock. The BCD register is nothing more than a set of three 4-bit registers. The outputs of each register are fed through the adjuster block, which implements the "add 3 if greater than 4" logic and fed back into the BCD registers but shifted by one in order to perform the "multiply-by-2" operation. At the same time, the msb of the binary shift register is brought into the lsb of the least significant digit of the BCD register in order to perform the "add the next bit" operations. Just as we must be able to load the binary shift register with an initial value on the first clock, we also have to initialize the BCD registers to all zeros on the first clock. However, this is easily done by simply performing a bitwise AND operation with a 0 if we want to initialize it and a 1 if we want to continue running. This can be done either before or after the adjuster blocks since they output a zero if the input is zero.

The above circuit will perform the complete conversion in exactly eight clock cycles. Either the conversion process must be stopped or the output at that point captured.

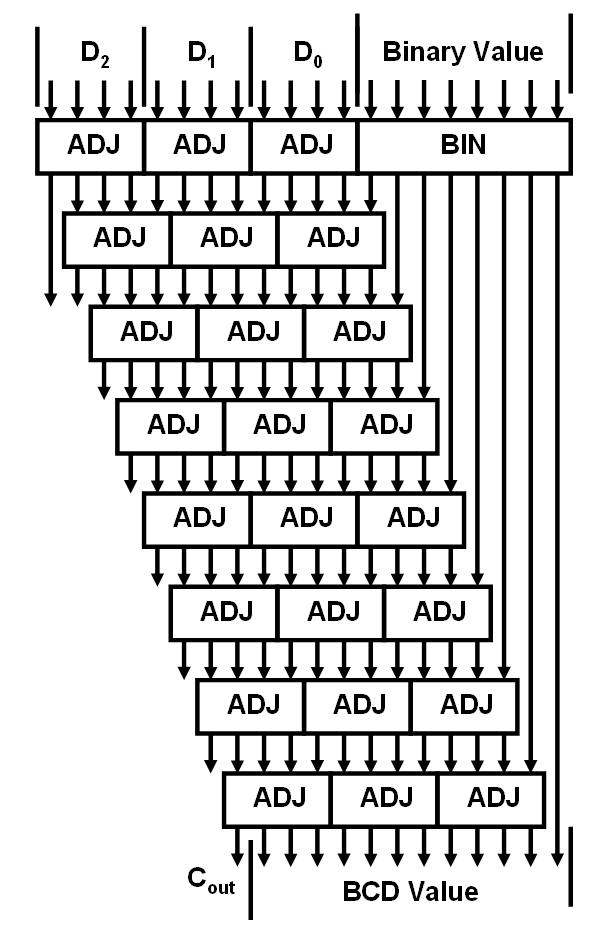

Let's now expand this circuit so that it is completely combinatorial (i.e., has no clock) and therefore produces the final BCD result immediately (subject only to the propagation delays through the logic circuits).

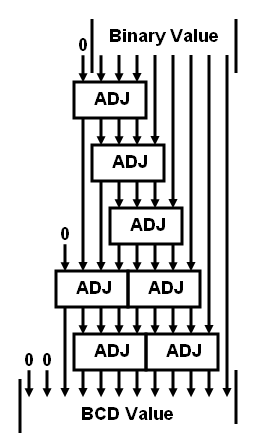

This above diagram contains far more adjuster blocks than are needed and we'll trim this shortly. Since we are now only feeding the values forward, the BCD values at the top of the figure only need to be the initialization values, namely zero. Furthermore, we no longer need to load the binary value into a shift register because we are picking off the bits directly and sending them to the appropriate level. Finally, since the adjuster logic echoes its input to its output unless the input value is greater than four, we can eliminate any adjuster blocks whose upper two input bits are zero. This results in the following final circuit.

The fact that the upper two bits of the most significant digit are hard tied to zero makes sense since the largest value that an 8-bit binary value can represent is 255. Notice that there are only five levels of logic that the signals must propagate through, thus this circuit can operate very fast.

So how many propagation delays are there for an N-bit binary value and how many ADJ blocks are needed? The first part is trivial to answer since each additional bit will result in an additional delay. We get the first three bits propagation free since a 3-bit binary number is its own BCD representation. So the propagation delay is (N-3) levels.

To determine how many ADJ blocks are needed, notice that we have to add a new column of ADJ blocks every third level and that, once added, it continues to add another block at each level from that point forward. Hence the first column will have (N-3) blocks, the second column (N-6) blocks, and so one. In general, the cth column will have (N-3c). The number of columns, C, can be determined by noting that we pick up the first column after 3 bits, the second column after 6 bits, and so on. Hence the number of columns is simply N/3 where we are performing integer division - another way of expressing this is C=int(N/3). Hence, for eight bits, C is equal to (8/3) which is 2. Note that, if N is evenly divisible by 3, we get a number of columns that is one greater than what is really needed. However, also notice that the number of ADJ blocks in that last column is zero, so the computation yields the correct answer. The end result is that the number of ADJ blocks needed is:

K = Number of ADJ blocks = C(N-1.5(C+1)) where C = int(N/3)

For N=8, this yields C=2 and K=7. For a 16-bit converter this yields N=16, C=5, K=35 ADJ blocks and 13 propagation delays.

A quick estimate of the number of blocks can be obtained by ignoring the integer division and simply substituting C=N/3 into the expression for K. This results in

K = N(N-3)/6 = (N^2)/6 - N/2

Note that this expression is exact if N is evenly divisibly by 3 and slightly overstates the value of K otherwise.

Notice in the diagram for the 8-bit BCD converter that the least significant bit of the binary value can a only affect the least significant digit of the BCD representation. This makes sense since changing this bit changes the value represented between an even number and the next higher odd number; thus only the one's digit is affected. Would you expect this to be true if this converter were designed to convert from binary to, say, base seven?

Now notice that the two least significant bits of the binary value can affect, at most, the two least significant digits of the BCD representation. Does this make sense?

In fact, there is a pattern that develops as you examine this behavior. In general, the least significant M bits in a binary representation can influence, at most, the M least significant digits in a BCD representation. Can you prove this mathematically?